ChatGPT is an AI chatbot developed by OpenAI that generates human-like text in response to user prompts. However, there are security risks and challenges associated with this platform.

First, ChatGPT is not currently regulated by any government body, meaning that there is no guarantee that the platform is safe.

Additionally, ChatGPT is not immune to cyber attacks, and criminals could steal information or data from users. Finally, ChatGPT is still new, so its weaknesses are not yet well understood, which makes it susceptible to attack.

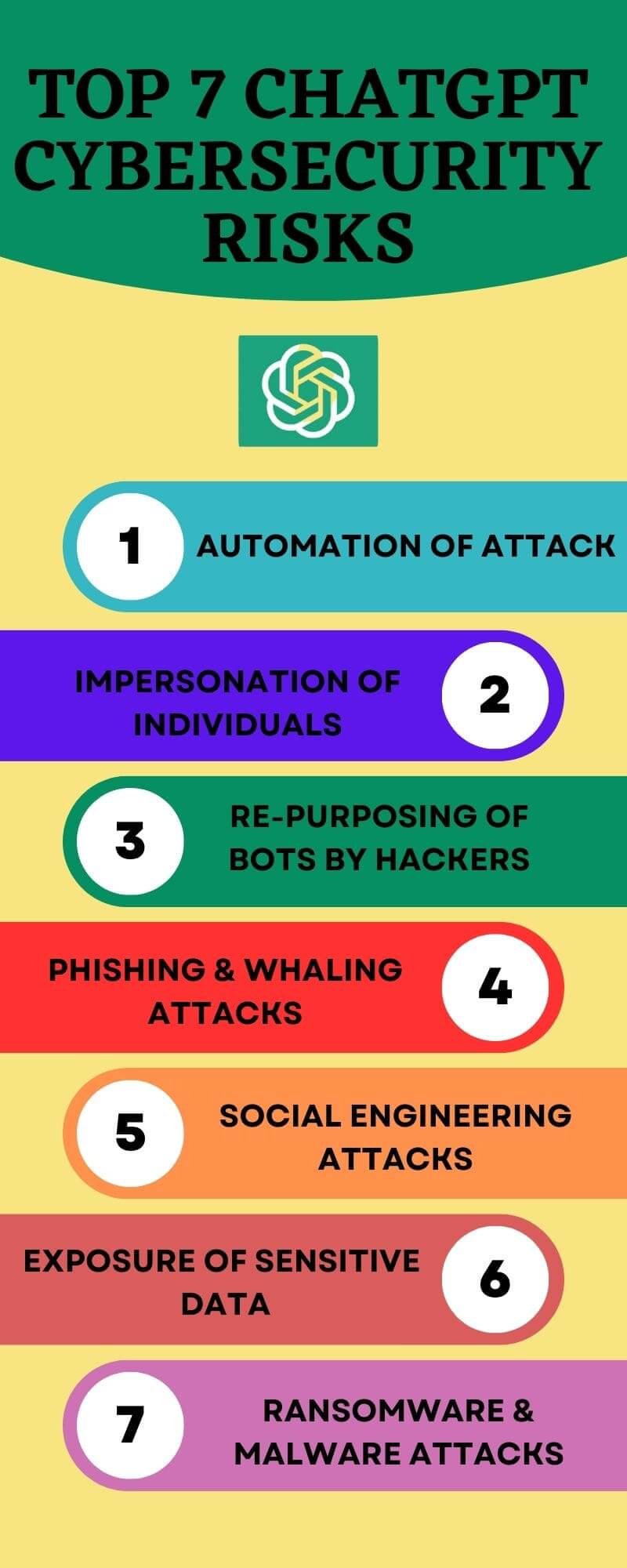

Cybersecurity Risks Associated With The Chat App ChatGPT

Many people are skeptical of chatbot technology because much is still unknown about how it will affect our society and our schools.

CNET reports that the New York City Department of Education has blocked access to ChatGPT over concerns about cheating and harmful impacts on student learning.

The artificial intelligence chatbot developed by OpenAI has been plagued by misinformation, which is a problem because it relies on data from the internet, which is often inaccurate.

There are several factors to consider when assessing the potential cybersecurity risk posed by an advanced chatbot.

The creator of the chatbot himself has stated that we are very close to a situation where AI can become dangerously powerful and could pose a serious cybersecurity risk.

It is important to take this risk seriously in order to avoid a potential situation where AI becomes too powerful and poses a threat to society.

In a recent demonstration, Check Point showed how ChatGPT can be used to create realistic phishing emails. The tool was used to create a fake email that looked like it was from a legitimate organization, and was able to fool a number of recipients.

ChatGPT warned that this content might violate OpenAI’s content policy, but after further instructions it complied and created a phishing email.

ChatGPT is a powerful tool for cybercriminals, as it provides them with a wide range of data and language abilities. This makes it an ideal way for them to carry out attacks without having to write any code or email themselves.

ChatGPT Security Risks

Researchers have reported that ChatGPT can be used to create malware that looks like a normal, benign file. This makes malicious code much easier to disguise and more difficult to detect.

The researchers said that this method shows the potential for developing malware with new or different malicious code, making it polymorphic in nature.

Cybersecurity experts are concerned that ChatGPT is being used to produce believable phishing and spear-phishing content, which can be used in social engineering attacks.

Manually written phishing messages often contain mistakes that targets can detect. For example, misspellings and grammar errors can indicate that the sender is not a native English speaker.

Criminals also frequently fail to use the same tone of voice as the entity they are impersonating.

OpenAI’s chatbot can be used to generate fake email messages that look like they come from an organization or individual, potentially tricking someone into giving away sensitive information or transferring money to the scammer.

Phishing is one of many concerns about generative pre-trained transformers (GPT), but there are others. One concern is that ChatGPT is trained only on publicly available content, so it cannot learn about specific, non-public content.

Another concern is that ChatGPT can be manipulated through disinformation, phishing, and other attacks.

GPT and successor technology can be used in cyber attacks to target systems and data

ChatGPT has many potential applications in both cyber attack and cyber defense. This is because the technology underlying ChatGPT, known as natural language processing or natural language generation (NLP/NLG), allows it to mimic human language very well and to create computer code.

How Does ChatGPT Impact the Domain of Cybersecurity?

Some companies use chatbots and other AI to improve their communication strategies. AI can provide a competitive benefit by showing how a contractor embraces innovative technologies and works with them to deliver superior services.

One of the most noticeable ways ChatGPT affects cybersecurity is by changing expectations among analysts. They may now feel pressure to find ways to use it while it is still developing, even though it is not yet a fully effective tool.

ChatGPT can help automate your code or email workflows, but this capability is also a red flag, as there are ways to circumvent the chatbot’s ethical policies.

ChatGPT may refuse a direct request to craft a phishing email, but it can be prompted to write an email that appears to come from somebody in authority and includes links that lead to harmful pages.
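Because such emails can be fluent and well written, defenders often look at structural signals rather than wording. The following is a minimal sketch of one such heuristic, not a complete phishing filter: it flags links whose visible text names one host while the underlying `href` points to another. The helper names (`LinkCollector`, `host_of`, `mismatched_links`) are hypothetical and chosen only for this illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> tag in an HTML body."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only record text while we are inside an <a> tag that has an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_of(value):
    """Return the lowercase hostname in value, or None if it does not look like a URL."""
    value = value.strip()
    if not value or any(ch.isspace() for ch in value):
        return None  # ordinary link text such as "Click here"
    candidate = value if "://" in value else "http://" + value
    try:
        host = urlparse(candidate).hostname
    except ValueError:
        return None
    return host.lower() if host and "." in host else None


def mismatched_links(html_body):
    """Return (text, href) pairs where the visible text names a different host than the href."""
    parser = LinkCollector()
    parser.feed(html_body)
    flagged = []
    for href, text in parser.links:
        shown, actual = host_of(text), host_of(href)
        if shown and actual and shown != actual:
            flagged.append((text, href))
    return flagged
```

A link shown as `www.example-bank.com` that actually points to `evil.example.net` would be flagged, while a plain "Click here" link would not. This is only one signal and will miss many attacks, so it should complement user training and mail filtering rather than replace them.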

The Microsoft-backed chatbot can hold conversations with users, a capability that could be used to automate ransomware attacks. This would allow the malware to negotiate with the victim, potentially leading to a successful compromise.

Some people say that ChatGPT can be used to strengthen remediation and learning tasks, such as writing defensive code, moving files to protected locations, and encrypting them.
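As an illustration of the kind of small defensive task mentioned above, here is a minimal sketch that moves a file into an owner-only directory and records its SHA-256 hash. The function name `quarantine_file` and the vault directory are assumptions made for this example. The permission bits apply to POSIX systems, and the encryption step is deliberately left out because it would need a vetted third-party library.

```python
import hashlib
import os
import shutil
from pathlib import Path


def quarantine_file(source, vault_dir):
    """Move source into vault_dir, restrict its permissions, and return (new_path, sha256)."""
    source = Path(source)
    vault = Path(vault_dir)
    vault.mkdir(parents=True, exist_ok=True)
    os.chmod(vault, 0o700)  # owner-only access to the directory (POSIX)

    # Hash the content before moving so the record can be checked later.
    digest = hashlib.sha256(source.read_bytes()).hexdigest()
    target = vault / source.name
    shutil.move(str(source), str(target))
    os.chmod(target, 0o600)  # owner read/write only (POSIX)
    return target, digest
```

Code like this, whether written by a person or generated by a chatbot, should still be reviewed and tested before it is relied on.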

ChatGPT is a very advanced interactive chatbot, but it’s still in its early stages, and much of its functionality is still unknown. On the other hand, the software’s detractors say that ChatGPT is too risky and could do more harm than good.

They say that ChatGPT is too advanced for its own good and that most of its abilities are still unknown.

What Do Analysts Predict for the Future?

Digital actors will play a big role in ChatGPT’s future, either for good or bad. Meanwhile, cybersecurity teams will be occupied with learning how to work with ChatGPT and pre-empting possible bad scenarios.

All of this will occur while new regulations on conversational AI are being put in place.

Some companies are hiring white hat hackers to try to find gaps in their cybersecurity and then use artificial intelligence to help them fix them.

This will create new sub-sectors of the industry, and knowledge about how to protect against ChatGPT-assisted cyberattacks will evolve over time.

If threat actors are able to manipulate AI more effectively than defenders, the outlook is more pessimistic, as this could enable them to do more damage in less time. The number of cyberattacks is already high, and ChatGPT could make them even more frequent.

Additionally, AI chatbots could make it easier for hackers to connect to botnets, potentially doing more damage.

How AI Influences Cybersecurity

There are tangible benefits and repercussions to ChatGPT in cybersecurity. Researchers haven’t looked at the side effects enough to determine their degree of influence, but it’s clear that ChatGPT will only get better and more competent over time.

This will both help reinforce cybersecurity protections and help criminals exploit digital systems beyond what we can imagine.

ChatGPT has a vast data trove that could be useful for both cybersecurity and marketing purposes. It will be interesting to see how this information is used in the future.

How do Hackers/Cyber Criminals use ChatGPT for Cyber Attacks?

ChatGPT is useful for both cybersecurity professionals and businesses, but it’s also being used by bad actors to make their cybercrime easier. For example, ChatGPT is being used to help write malware and craft believable phishing emails.

ChatGPT is a technology that is excellent at imitating human writing, which makes it a powerful tool for phishing and social engineering.

Threat actors are carrying out social engineering attacks with the help of ChatGPT, using it to create fake identities and make their attacks more likely to succeed.

This makes it easier for non-native speakers to create convincing phishing emails, and it also makes it harder for recipients to tell the difference between a legitimate and a fraudulent email.

ChatGPT makes it easier for threat actors to carry out successful attacks, by lowering the barriers to entry for those with limited cybersecurity background and technical skills.

Researchers at the cybersecurity firm CyberArk revealed that they were able to create polymorphic malware with the help of ChatGPT. Such malware can automatically alter its own code to escape detection, which makes its removal a more complex task.

Ready or not, here it comes

The arrival of ChatGPT and next-generation AI models means we need to adapt to the new technology or be afraid of the changes it will bring. There are pros and cons to embracing these technologies, so we need to make a decision about how to deal with them.

There are both offensive and defensive applications to artificial intelligence. Offensively, it can help workers be more productive and make better business decisions. Defensively, we need to update our policies, procedures, and protocols to protect ourselves from the potential security risks posed by AI.

With ChatGPT and AI, security professionals and cybercriminals can both benefit from faster, more accurate investigations and better security planning. We need to be ready for the changes these technologies will bring.

To take advantage of these opportunities and mitigate the challenges, a holistic strategy is essential. Ignoring these developments puts your business at risk.

There are growing concerns about chatbots, specifically their ability to deceive and manipulate people

ChatGPT can be used by malicious actors to create malicious code, phishing emails, and infection chains that can target hundreds of computers simultaneously.

This is not surprising, as any Internet-based product with a user interface where people provide information can easily be manipulated and used to trick users into providing their sensitive data.

There is concern that ChatGPT could be used to generate scripts that are indistinguishable from those generated by humans, and which could be used for phishing attacks.

ChatGPT and similar platforms make it easy for attackers to create realistic-sounding emails, as open-source versions of the service are becoming increasingly available.

Anyone with advanced coding skills and access to compromised emails can do this, which means that AI systems can be easily trained on an organization’s stolen data.

ChatGPT has the potential to generate human-like text that is indistinguishable from what human users create. This could allow malicious people to spread misinformation or even impersonate individuals.

Cybercriminals can use ChatGPT to perpetrate attacks, but there are things you can do to prevent that from happening. Cybersecurity awareness training is effective in helping you thwart many forms of phishing attacks, including those perpetrated with AI chatbots.

The chatbot programming risks include the possibility of bot fraud, bot attacks, and user confusion

Anyone with advanced computer skills can use ChatGPT to generate code. This is a real gain in productivity, but there are also hidden dangers.

In theory, the chatbot will not write malicious code when asked, because it has safeguards in place to identify inappropriate requests. Still, it is possible to bypass these safeguards to get the desired output.

ChatGPT gives both less-experienced and expert attackers the opportunity to write working malware code. This could have serious consequences, as malicious code is already widely available and AI chatbots like ChatGPT will likely only make it easier for anyone to create it.

ChatGPT-generated malware could lead to a wave of polymorphic malware, as its code is easily varied. Additionally, such malware may contain no obviously malicious code in the file itself, so it is difficult to detect and mitigate.

Studies have also shown that the chatbot can produce and mutate injection code, highlighting its coding risks. There is concern that lesser-skilled attackers could write highly capable malware with large language models (LLMs).

The developers are not able to verify the chat-generated code

The ChatGPT AI chatbot can’t do as good a job as experienced developers at verifying the security and integrity of code, but it’s still useful for helping inexperienced developers check code before it’s committed to a higher-risk environment, like production.
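Generated code can also be screened automatically before it is committed. The following is a minimal sketch, assuming the generated code is Python: it uses the standard `ast` module to flag a few calls that usually deserve a closer look. The deny-list `RISKY_CALLS` and the function names are hypothetical and deliberately short, so this is a supplement to human review, not a replacement.

```python
import ast

# Hypothetical, deliberately short deny-list; a real review would cover far more.
RISKY_CALLS = {"eval", "exec", "os.system", "subprocess.call", "pickle.loads"}


def dotted_name(node):
    """Return 'a.b.c' for Name/Attribute chains, or None for anything else."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None


def find_risky_calls(source):
    """Return sorted (line number, call name) pairs for deny-listed calls in source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = dotted_name(node.func)
            if name in RISKY_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)
```

The check parses the code without executing it, so it is safe to run on untrusted output. It only matches calls written with these exact names, so aliased imports or obfuscated code would slip past it.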

ChatGPT can have serious malware impacts, especially if it’s used by malicious individuals or organizations

A study has found that criminals are already using a chatbot to create malware and other tools for attacking computers. However, the chatbot’s creators say that it is only for benign purposes.

Many users believe that malware can be easily created by chatbots, as the code required is similar to that taught in a computer class.

The chatbot can be used to create a file stealer that searches for files and then self-deletes after the files are uploaded or when errors occur. This makes it very difficult to track down the source of the malware.

Cybercriminals can use ChatGPT to create a script for a dark web marketplace that sells personal information stolen in data breaches or cybercrime-as-a-service products.

Security experts are most concerned about ChatGPT’s potential to make malware attacks easier for anyone, not just skilled hackers.

This could make it easier for malicious actors to spread malware and it may also make it easier for people to become infected with malware.

Conclusion

There is no doubt that chatbots are here to stay, and that their use carries with it a number of cybersecurity risks. For businesses that want to ensure their chatbots are as secure as possible, it is important to have a well-executed cybersecurity strategy in place.

This will help to protect the company against any potential cyber-attacks, and ensure that any data that is transmitted between the chatbot and its users is kept safe.